It used to be that I had to seek out instances of overreaching Facebook censorship. Now, thanks to loads of recent high-profile examples and increased popular interest in the topic, they fall right into my lap. On BoingBoing today, Richard Metzger, a self-described “married, middle-aged man,” writes that he became a spokesman for gay rights overnight when Facebook deleted a photo he had posted of two gay men kissing.

After Metzger posted the photo, one of his many friends left a homophobic comment and most likely reported the image as a TOS violation. Despite the fact that Metzger’s other friends jumped in to defend the image and take down the homophobe, Facebook’s automated systems (or, worse, one of their staff members) plucked the image, sending Metzger the usual automated message. Metzger writes:

According to Facebook’s FAQ on matters like this, EVERY claim of “abusive” posts is investigated by an actual live human being. If we take them at their word, it wasn’t automatically deleted.

My assumption is that “Jerry” complained and that perhaps a conservative or religious person working for Facebook –maybe it was an outsourced worker in another country, I can’t say–got that case number, looked at it for a split second, vaguely (or wholeheartedly, who can say?) agreed with “Jerry” (or it was just “easier” to “agree” with him as a matter of corporate policy) dinged it and moved on. I doubt that there was very, very little thought given to the matter. “Delete” and move on to the next item of “abusive material” on the list.

Metzger also pointedly notes:

The real problem here is certainly not that Facebook is a homophobic company. It’s that their terrible corporate policy on censorship needs to stop siding with the idiots, the complainers and the least-enlightened and evolved amongst us as a matter of business expediency!

Indeed. As others have pointed out, this takedown has resulted in a veritable Streisand effect, pushing this story beyond a local issue and into the stratosphere. Similarly, Facebook’s removal of a page calling for a third Palestinian intifada has resulted in literally dozens of copycat pages and groups, making it increasingly difficult for Facebook to enforce their terms of service without bias (which is to say: Facebook claims they only took down the page after spotting actual incitement; with few Arabic-speaking staff members, it’ll be nearly impossible to do the same for each page).

Ultimately, this is not an issue of Facebook bias, but of a poorly implemented community policing system. As I said in my talk at re:publica XI, community policing may simply not be the answer. Metzger ended up with a positive outcome in this case, but no thanks to Facebook’s lack of robust processes. In fact, I would bet that he received the response he did because the story had already blown up.

I’m no longer convinced of the system. I’ve long expressed the feeling that community policing is skewed against activists and the semi-famous, but examples like this illustrate that it’s worse than I thought. No, Facebook is not inherently homophobic, but this case shows that either they’re lying about automated processes or their review staff are poorly trained. In either case, anyone who posts a photo or video that borders on contentious is at risk of seeing their content removed.

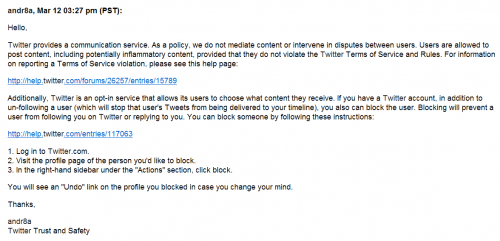

Now, this last bit feels like an addendum when in fact it deserves an entire post, but I want to highlight an example of a company that doesn’t really moderate content, and what that means. Twitter, which has built a reputation on defending its users, has stated to users time and time again that unless content is strictly in violation of their TOS, they won’t touch it. In many cases, this has included contentious content that sits somewhere in the grey area.

My colleague and friend Ethan Zuckerman recently shared an extremely contentious example of this with me: after spotting clear calls for violence against Christians in Nigeria on Twitter, he emailed a staffer at Twitter out of concern. The Twitter staffer expressed shared concern, but stated that Twitter doesn’t moderate content. After Ethan and I talked it over, we ultimately realized that this was probably for the best: if Twitter were to take that account down, there’s no telling how many would pop up in its place. When Ethan shared the verdict, as well as the results of our discussion, with a friend in Nigeria, he told me that the friend came around on the issue too.

As Supreme Court Justice Louis Brandeis advised, in his famous Whitney v. California opinion in 1927, “If there be time to expose through discussion the falsehood and fallacies, to avert the evil by the processes of education, the remedy to be applied is more speech, not enforced silence.”

36 replies on “On Facebook’s deletion of a gay kiss (or why community policing doesn’t work)”

Ultimately, this is not an issue of Facebook bias, but of a poorly implemented community policing system.

Facebook is a company that, while still new, has a LOT of resources courtesy of Goldman Sachs and others. I agree that it is not an issue of bias, but it is an indicator of Facebook priorities – an enlightened policing system does not seem high on the list.

I can relate to this article, as I too have had a photo removed for the same reason as mentioned: somebody in my contacts has obviously not liked it, so they reported it. It’s beyond me as to why, as pretty much everyone on my list knows I am openly gay. I think Facebook needs to update their policies and deal with matters that are a lot worse, like the paedophiles that scour their site.
